Creating therapeutic abundance

On limiting reagents in the medicine production function

tl;dr

The invention of new medicines is rate limited by our knowledge of cells and molecules ("targets") that we can manipulate to treat disease. The cost of discovering new medicines has increased because the lowest hanging fruit on the tree of ideas has been picked. Emerging technologies at the intersection of artificial intelligence & genomics have the potential to unlock a new era of target abundance, potentially reversing the decades-long decline in R&D productivity. If realized, this will be one of the most important impacts of AI over the coming decades.

Eroom's law

Gordon Moore famously predicted in 1965 that the number of transistors per integrated circuit would double every year, a pace he revised to every two years in 1975. The computing industry delivered.

Jack Scannell infamously predicted in 2012 that the number of new drugs approved per billion dollars of R&D spending would halve every nine years. Unfortunately, our therapeutics industry has largely followed through.[1]
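To make the contrast concrete, here is a minimal Python sketch that compounds both trends over the same horizon. It assumes idealized exponentials (doubling every two years for transistors, halving every nine years for drugs per inflation-adjusted billion dollars of R&D); the function names are illustrative, and the real data series are noisier.

```python
def moore_factor(years: float, doubling_period: float = 2.0) -> float:
    """Growth in transistors per chip after `years`, assuming a fixed doubling period."""
    return 2.0 ** (years / doubling_period)


def eroom_factor(years: float, halving_period: float = 9.0) -> float:
    """Remaining fraction of drugs per $1B of R&D after `years`, assuming a fixed halving period."""
    return 0.5 ** (years / halving_period)


for span in (9, 18, 36, 60):
    print(f"{span:>2} years: transistors x{moore_factor(span):,.0f}, "
          f"drugs per $1B x{eroom_factor(span):.3f}")
```

Under this idealized rate, 60 years of Eroom's law leaves roughly 1% of the starting productivity, about a 100-fold decline.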

Why has this happened?

Eroom's law bundles together two distinct problems in our industry – rising R&D costs and declining success rates per drug program.

Rising R&D costs have many sources. A plurality likely trace back to Baumol's cost disease.[2] Cost disease applies throughout the economy, though, so drug development's unique problems might be more directly tied to the high failure rate of new candidate medicines.

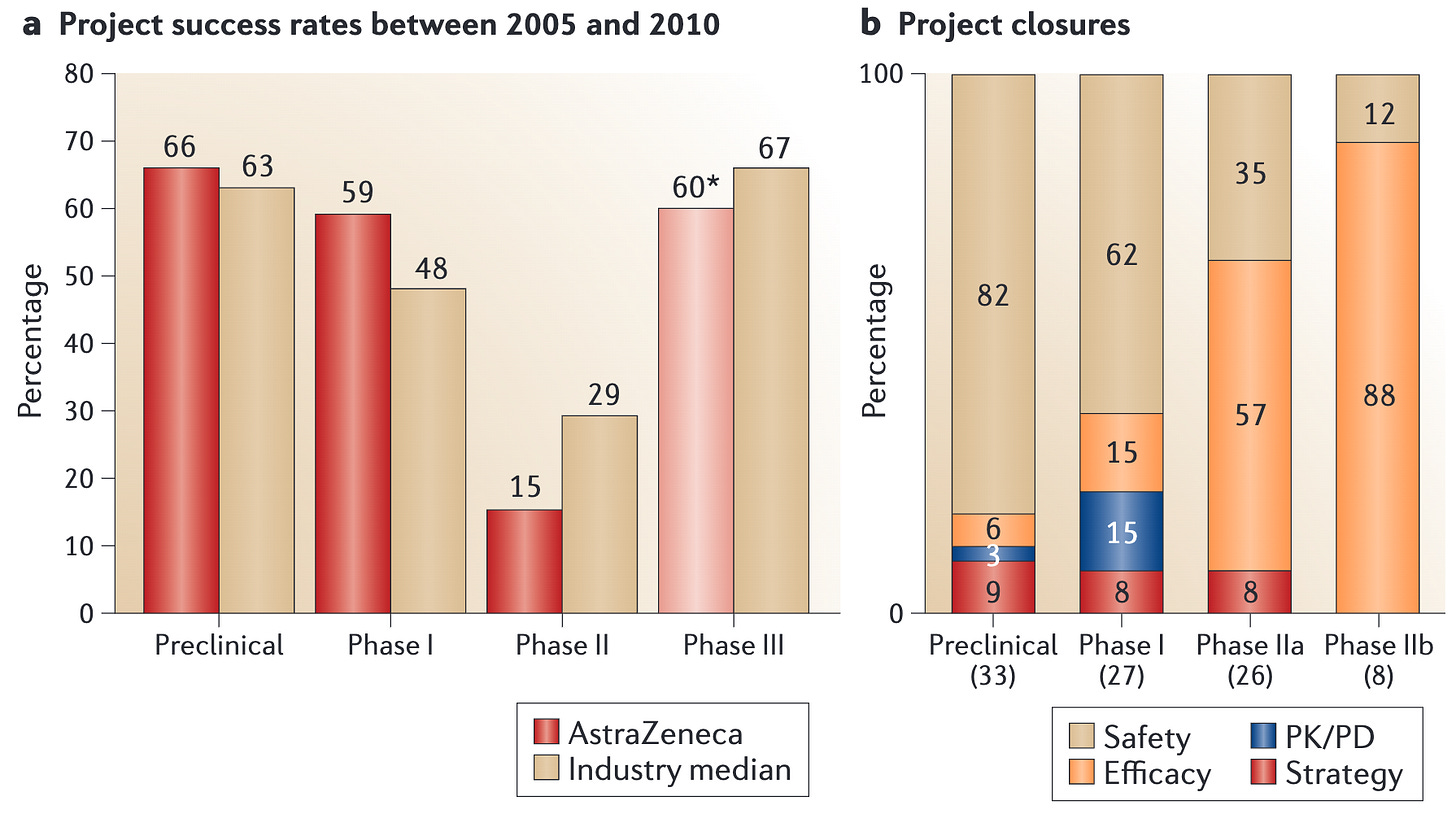

Drug program success rates are equally complex. Failures can be attributed to safety issues, failure of a drug to hit the desired biological target, or improper selection of the target for a given disease.

Ascribing exact values to the frequency of each of these failures is challenging. Most of the knowledge of drug program lifecycles remains locked within drug companies. Nonetheless, we can bucket the failures into two broad categories, safety and efficacy, and make informed estimates.

Safety failures – ~20-30% of failed candidates

A molecule was developed, but proved unsafe in patients. These failures are typically detected in Phase 1 trials.

Efficacy failures – 70-80% of failed candidates

The remaining failed candidates (roughly 63% of all drugs placed into trials, period) fail due to a lack of efficacy. Even though the drugs are safe, they don't benefit patients by treating their disease.
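The 63% figure follows from multiplying the overall clinical failure rate by the efficacy share of failures. A minimal sketch, assuming roughly 90% of candidates entering trials fail (a commonly cited figure that is not stated explicitly above) and treating every non-efficacy failure as a safety failure, per the two-bucket simplification:

```python
def failure_breakdown(overall_failure_rate: float, efficacy_share: float) -> dict[str, float]:
    """Split the overall clinical failure rate into efficacy and safety failures.

    All outputs are fractions of *all* candidates entering trials, not of failures.
    """
    efficacy = overall_failure_rate * efficacy_share
    safety = overall_failure_rate * (1.0 - efficacy_share)
    return {"efficacy": efficacy, "safety": safety, "success": 1.0 - overall_failure_rate}


# Assumed inputs: ~90% of candidates fail; 70% of those failures are efficacy failures.
print(failure_breakdown(0.90, 0.70))
```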

From these coarse numbers, it's clear that the highest leverage point in our drug development process is increasing the efficacy rate of new candidate medicines.[3]

This fact shows up clearly in clinical trial results. The plurality of medicines fail in Phase 2 trials, when efficacy is first measured and we first test the hypothesis that manipulating a given biological target will actually benefit patients.[4]

This stands in contrast to some rhetoric in the ecosystem claiming that an undue regulatory burden in the US market (where >50% of revenues arise) is the main challenge holding back drug development. If this were true, you'd expect to see amazing therapies that are available exclusively in ex-US geographies with simpler regulatory schemes. The absence of these medicines suggests that regulatory changes alone are insufficient to fix our therapeutic development challenge, even if they could prove an accelerant.

Rather, our main challenges are scientific. We simply don't know how to make effective drugs that preserve health or reverse disease! If we want more medicines, we need to understand why they don't work and fix it.

Why don't our candidate medicines work?

Efficacy failures can broadly occur for two reasons:

Engagement failures: We chose the right biology ("target") to manipulate, but our drug candidate failed to achieve the desired manipulation. This is the closest thing drug development has to an engineering problem.

Target failures: The drug candidate manipulated our chosen biology exactly as expected. Unfortunately, the target failed to have the desired effect on the disease. This is a scientific or epistemic failure, rather than an engineering problem. We simply failed to understand the biology well enough to intervene and benefit patients.

It's difficult to know the exact frequency of these two failure modes, but we can infer from a few sources that target failures dominate.

Success rates for biosimilar drugs hitting known targets are extremely high, >80%.[5]

Drugs against targets with genetic evidence have a 2-3 fold higher success rate than those against targets lacking this evidence, suggesting that picking good targets is a major source of leverage.[6]

Among organizations with meaningful internal data, picking the right target is considered the first priority of all programs (e.g. "Right target" is the first tenet of AstraZeneca's "5Rs" framework).[7]

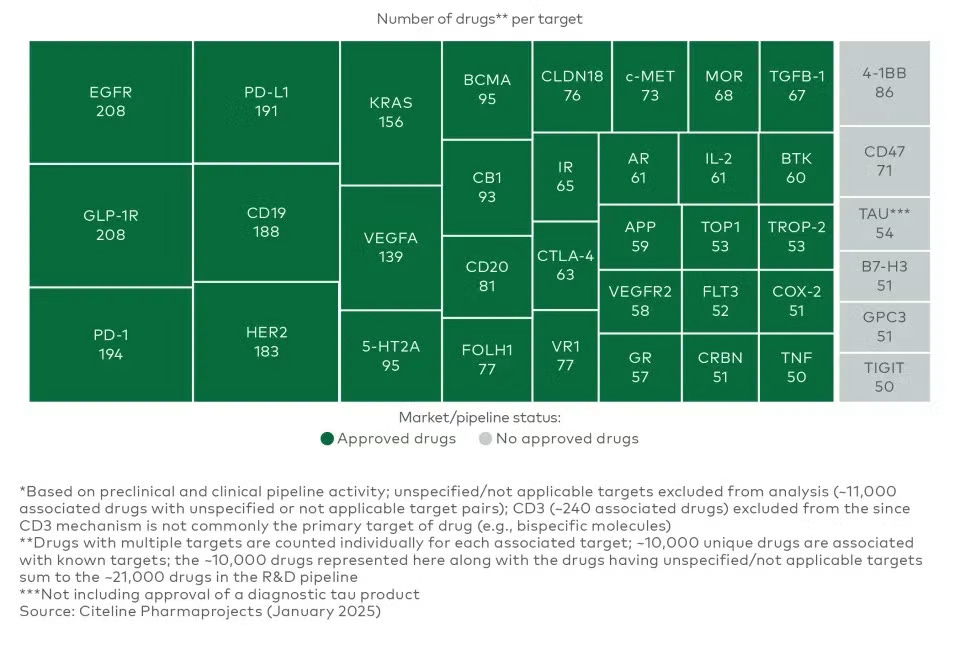

The predominance of target failures has likewise led most companies working on new modalities to address a small set of targets with well-validated biology. This has led to dozens of potential medicines "crowding" on the same targets, and this trend is increasing over time.[8] A recent report from LEK demonstrates just how pronounced this trend has become. As a complement to rigorous academic and market research, simply scanning the pipeline pages of biotechs will convince an interested reader that this phenomenon is very real.

Crowding on known targets is perhaps the strongest integrated signal that target failures are the predominant reason our medicines don't work in the clinic. Many distinct teams of incredibly smart people have aggregated all available information and concluded that target discovery is so fraught that they would rather take on myriad market risks than face it.

Are targets getting harder to find?

If searching for targets is the limiting reagent in our medicine production function, the difficulty of finding targets must increase over time in order to explain part of Eroom's law. How could this be the case given all the improvements in underlying biomedical science?

In an influential paper "Are ideas getting harder to find?", Nicholas Bloom and colleagues argue that many fields of invention suffer from diminishing returns to investment. Intuitively, the low hanging fruit in a given discipline is picked early and more investment is required merely to reap the same harvest from higher branches on the tree of ideas.
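Bloom and colleagues frame this with a simple accounting identity: idea output = research productivity × research effort. A stylized Python sketch of the consequence, assuming productivity decays at a constant continuous rate (the 5%/year value below is hypothetical, chosen for illustration rather than taken from the paper):

```python
import math


def effort_to_hold_output(years: float, productivity_decline: float, initial_effort: float = 1.0) -> float:
    """Research effort needed to keep idea output constant while productivity decays.

    Stylized accounting: idea output = research productivity * research effort.
    Productivity starts at 1 and falls at a continuous annual rate
    `productivity_decline`, so effort must grow at that same rate.
    """
    productivity = math.exp(-productivity_decline * years)
    return initial_effort / productivity


# Hypothetical 5%/year decline, for illustration only.
for t in (0, 10, 20, 40):
    print(f"year {t:>2}: {effort_to_hold_output(t, 0.05):.2f}x the initial effort")
```

At a constant 5% annual decline, the effort needed merely to stand still doubles roughly every 14 years (ln 2 / 0.05 ≈ 13.9).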

In therapeutics, we can imagine concrete examples to explain how this might be the case. At the beginning of the Eroom's law data series in the 1950s, the most successful new medicines were broad spectrum antibiotics. In the 1960s and 1970s, several new medicines targeted the primary sex hormones (estrogen and testosterone agonists and antagonists). The 1980s saw successful antivirals for HIV and early biologics for central signaling hormones (insulin, growth hormone, erythropoietin).

It's striking from this sort of survey that infectious disease and circulating hormone targets dominated the first several decades of modern drug discovery. These targets are the most obvious examples of low hanging fruit in the industry. Pathogens have a small number of genes, making targets relatively easy to find, and their biology is divergent from our own, so they are uniquely straightforward to drug safely. It's easier to find a safe inhibitor of a gene if the gene exists only in a pathogen and not in normal human cells.

Hormones are likewise simple to identify because they circulate in the blood and their levels can be measured longitudinally. They are easy to drug because their structures are comparatively simple and the biology is "designed" for a single molecule to evoke a complex phenotype. Early recombinant DNA companies Genentech and Amgen both chose to develop hormone drugs because the genes were small, and therefore easier to clone and manufacture.[9]

The common diseases that predominate as ailments today are far more complex. Targets are getting harder to find not because we are getting worse at selection, but because many of the easy and obvious therapeutic hypotheses have already been exploited.

Inventing medicines that match nature's complexity

Accelerating drug discovery will require us to discover "targets" more effectively. Not only will this involve improving our traditional target identification processes, but changing our definition of a target altogether.

Today, we typically conceive of a target as a single gene or molecule that we can manipulate to achieve a therapeutic goal. This conception likely needs to be broken to access the metaphorical fruit higher on the tree.

Aging and disease involve the complex interplay of molecular circuits. Outside of infectious and inherited monogenic diseases, there are few health problems that arise as the result of a single molecule that is too high or too low in abundance. Preserving health and enhancing our physiology will require us to match the complexity of our biology with the complexity of our medicines. We need to stop thinking about targets as single molecules and begin to imagine therapeutic hypotheses that rely on combinations of genes, engineered cellular behaviors, and remodeling of tissues.

This point seems obvious. Why haven't we developed medicines like this to date?

The origins of our contemporary targets

Most of our current targets emerged from a stochastic research process. Namely, academic researchers explore the biology of a disease, then eventually identify a molecule that is necessary or sufficient for the pathology to manifest. Each of these molecules is typically proposed through a heuristic process.

Concretely, a scientist sits and thinks hard about the problem, makes a guess at the responsible molecular players based on their intuition, prior art, and their new data, then tests to see if the molecule is causal. The vast majority of these hypotheses are wrong! The few that prove to be correct often become the basis of our modern target-based drug discovery process, and several companies quickly launch programs to prosecute them. This approach yielded targets like PD-1, CD19, VEGFR2, and BTK, all among today's crowded targets.

Despite its successes, this method has a few key limitations that explain why our current targets are so tightly constrained.

The throughput of target:disease pairs tested in this fashion is low, and the efficiency in terms of dollars per target discovered is poor.[10]

Given the low throughput, it's nearly impossible to test hypotheses that involve manipulating biology in a manner more complex than dialing a single target all the way up (overexpression, drug-like agonism) or all the way down (genetic knockout, drug-like inhibition). This inherently limits us to discovering targets that are far more reductionist than the actual biology we hope to manipulate.

Distilling natural experiments

The sparsity of target space has been an acknowledged problem in the industry for decades. Shortly after the conclusion of the Human Genome Project, large scale human genetic studies appeared to offer one possible answer to the problem.

Each human genome contains more than a million variants relative to the representative “reference” genome. These variants serve as a form of natural experiment, one of the only sources of information on the effect of manipulating a given gene in humans.

Given a large number of human genomes paired with medical records, researchers can draw associations between genetic variants and human health. Variants can then be associated to genes, and researchers can discover targets that may exacerbate or prevent a given disease. This approach has successfully yielded some of the now crowded targets in today’s pantheon, including PCSK9.

A whole cohort of companies (Celera, deCODE, Incyte, Millennium, Myriad) was created to leverage this new resource. It might seem surprising at first blush that genetic methods haven’t changed the course of R&D productivity.

While promising, human genetics can only reveal a certain class of targets. The larger the effect size of a genetic variant, the less frequently it appears in the population due to selective pressure. In effect, this means that the largest effects in biology are the least likely to be discovered using human genetics. Many of the best known targets have minimal genetic signal for this reason.
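One standard way to see why large-effect variants are rare is mutation-selection balance from population genetics: for a deleterious allele with mutation rate μ, selection coefficient s, and dominance h, the equilibrium frequency is approximately q ≈ μ / (h·s). The sketch below pairs that approximation with a carrier count for a biobank. It assumes that a larger disease effect means a larger fitness cost, which is itself a simplification, and the mutation rate and cohort size are hypothetical.

```python
def equilibrium_frequency(mutation_rate: float, selection: float, dominance: float = 0.5) -> float:
    """Approximate mutation-selection balance: q ~ mu / (h * s).

    Assumes the heterozygous fitness cost h * s is much larger than mu; it does
    not apply to fully recessive alleles (h = 0), where q ~ sqrt(mu / s) instead.
    """
    return mutation_rate / (dominance * selection)


def expected_carriers(frequency: float, cohort_size: int) -> float:
    """Expected heterozygous carriers in a diploid cohort, ~2 * N * q for rare alleles."""
    return 2 * cohort_size * frequency


MUTATION_RATE = 1e-6    # hypothetical per-gene rate of new damaging mutations
COHORT_SIZE = 500_000   # hypothetical biobank size

for s in (0.001, 0.01, 0.1):  # larger s = stronger selection against the variant
    q = equilibrium_frequency(MUTATION_RATE, s)
    print(f"s={s}: q ~ {q:.1e}, ~{expected_carriers(q, COHORT_SIZE):.0f} carriers")
```

Each ten-fold increase in the fitness cost cuts the expected carrier count ten-fold, leaving only about 20 carriers of the most strongly selected variant in this hypothetical cohort, often too few for a confident association.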

Our current methods are good at discovering individual genes that associate with health, but discovering combinations of genes is nascent at best. Human genetics cannot help us discover the combinatorial medicines or gene circuits to install in a cell therapy.

Sociologically, discovering drug targets with human genetics has become something of a consensus opinion. Most large drug discovery firms have teams dedicated to this approach. This has contributed to the crowding problem, leading many firms to address the same set of targets within the constraints of genetic discovery. These medicines can certainly be impactful, but it seems unlikely that 10+ medicines targeting PCSK9 is the optimal resource allocation for patients.

Building systems of discovery

Is it possible to build a more deterministic, less constrained discovery process? Can we discover target biologies with a complexity matching the origins of disease?

Two technological revolutions argue in the affirmative. Functional genomics methods now enable us to test far more hypotheses than ever before. From the resulting data corpora, artificial intelligence models can search otherwise intractably large hypothesis spaces, like the space of possible genetic circuits or combinatorial therapies.[11] By performing most experiments in the world of bits rather than atoms, it’s possible to address questions that were inaccessible to a previous generation of scientists.

Functional genomics methods use DNA sequencing ("reading") and synthesis ("writing") technologies to parallelize experiments at the level of cells and molecules. Rather than running each experiment in a unique test tube to keep track of the conditions, researchers encode experimental details in DNA basepairs within a cell or molecule, then read them out by sequencing.

In practice, this allows researchers to treat the cell as the unit of experimentation, increasing the throughput of many target discovery questions by 100-1000X. These methods aren't applicable to every target discovery problem (e.g. some pathologies only manifest across tissue systems), but they nonetheless unlock a class of putative interventions that were previously too numerous to search effectively.
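As an illustration of "encode the condition in DNA, then read it out by sequencing," here is a minimal sketch of how a pooled screen might be tallied: each cell carries a barcode identifying its perturbation, sequencing reads are matched back to barcodes, and each perturbation is scored by how its barcode abundance changes after a selection step. The barcodes, gene names, and the simple log2 fold-change score with a pseudocount are hypothetical choices for illustration, not a specific published pipeline.

```python
import math
from collections import Counter

# Hypothetical barcode -> perturbation map; real libraries hold thousands of guides.
LIBRARY = {
    "ACGTACGT": "GENE_A_knockout",
    "TTGCAAGC": "GENE_B_knockout",
    "GGATCCTA": "non_targeting_control",
}


def count_barcodes(reads: list[str], barcode_len: int = 8) -> Counter:
    """Assign each read to a perturbation using its leading barcode; unmatched reads are dropped."""
    counts: Counter = Counter()
    for read in reads:
        perturbation = LIBRARY.get(read[:barcode_len])
        if perturbation is not None:
            counts[perturbation] += 1
    return counts


def log2_fold_changes(before: Counter, after: Counter, pseudocount: float = 0.5) -> dict[str, float]:
    """Score each perturbation by the change in its normalized abundance after selection."""
    total_before = sum(before.values())
    total_after = sum(after.values())
    scores = {}
    for perturbation in LIBRARY.values():
        freq_before = (before[perturbation] + pseudocount) / total_before
        freq_after = (after[perturbation] + pseudocount) / total_after
        scores[perturbation] = math.log2(freq_after / freq_before)
    return scores


# Toy reads: an 8-base barcode followed by arbitrary downstream sequence.
reads_before = ["ACGTACGTAAA"] * 10 + ["TTGCAAGCAAA"] * 10 + ["GGATCCTAAAA"] * 10
reads_after = ["ACGTACGTAAA"] * 2 + ["TTGCAAGCAAA"] * 18 + ["GGATCCTAAAA"] * 10
print(log2_fold_changes(count_barcodes(reads_before), count_barcodes(reads_after)))
```

The key design point is that every condition shares one tube: the barcode, not physical location, tells you which perturbation each read came from.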

It's reasonable to think of these methods as a way to make traditional "perturbation" experiments, which teach us how biological systems work,[12] amenable to the multiplexing benefits of DNA sequencing. The cost of DNA sequencing is falling over time, which provides a tailwind to our ability to discover new target biologies for therapeutics. This is just one way that solving engineering problems can accelerate progress on the distinct and more challenging scientific problems facing our industry.

Even with the best possible experimental methods, some of the most promising target biologies will never be searched exhaustively. There is a nearly infinite number of combinatorial genetic interventions we might drug, synthetic circuits we might engineer into cells, and changes in tissue composition we might engender.
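A quick count shows why exhaustive search breaks down. This sketch assumes roughly 20,000 human protein-coding genes (a commonly cited approximate figure) and counts only unordered knockout combinations, ignoring dosing levels, cell types, and timing:

```python
from math import comb

N_GENES = 20_000  # approximate number of human protein-coding genes (assumption)

# Unordered k-gene knockout combinations only: no dosing levels, cell types, or timing.
for k in (1, 2, 3, 4):
    print(f"{k}-gene combinations: {comb(N_GENES, k):.3e}")
```

Even a screen testing a million combinations per experiment (a hypothetical throughput) would need about 200 experiments to cover every pair, and over a million to cover every triple.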

Artificial intelligence models can learn general rules from the data generated in functional genomics experiments of many flavors, predicting outcomes for the experiments we haven't yet run. If we manage to construct a performant model for a given class of target biologies, we may be able to increase the efficiency of target discovery by many orders of magnitude. The cost of discovering a target could conceivably go from >$1B to <$1M.
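The simplest possible version of "predicting experiments we haven't run" is an additive baseline: measure single-gene perturbation effects, then predict each untested pair as the sum of its parts. This is a hypothetical toy, far simpler than the learned models described here, and the gene names and effect values are made up. Pairs whose measured effect departs from the additive prediction (genetic interactions) are exactly where richer models have to earn their keep.

```python
from itertools import combinations

# Hypothetical measured effects of single-gene perturbations on some phenotype score.
single_effects = {"GENE_A": -0.8, "GENE_B": 0.3, "GENE_C": 0.5, "GENE_D": -0.1}

# Hypothetical measured pairs; every other pair is unmeasured.
measured_pairs = {("GENE_A", "GENE_B"): -0.2}


def additive_prediction(pair: tuple[str, str]) -> float:
    """Null model: a combination's effect is the sum of its single effects."""
    return sum(single_effects[gene] for gene in pair)


def interaction(pair: tuple[str, str]) -> float:
    """Deviation of a measured pair from the additive expectation."""
    return measured_pairs[pair] - additive_prediction(pair)


# Rank untested pairs by predicted effect to choose the next experiments.
untested = [p for p in combinations(sorted(single_effects), 2) if p not in measured_pairs]
ranked = sorted(untested, key=additive_prediction, reverse=True)
print(ranked[:3])
print(round(interaction(("GENE_A", "GENE_B")), 2))
```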

There's growing interest in the idea of combining these technologies to build "virtual cells," models that can predict the outcomes of target discovery experiments in silico before they're ever executed in the lab. The grand version of this vision spans all possible target biologies, from gene inhibitions to polypharmaceutical small molecule treatments. In its maximal form, it may take many years to realize.

More limited realizations, however, are tractable today. The initial versions of these models are already emerging within early Predictive Biology companies. As a few examples, Recursion is building models of genetic perturbations in cancer cells, Tahoe Tx is building models in oncology with a chemical biology approach, and NewLimit has developed models for reprogramming cell age across human cell types.[13] Focused models like these represent an early demonstration that this general approach can yield therapeutic value.

These technologies have only emerged in the last 5-10 years. This may seem like old news from an academic perspective, but drug discovery cycles are on the order of a decade. We are only now beginning to reap the first harvest from this approach. We've begun to see the first medicines addressing emerging target biologies in the clinic, including complex cell states and combinatorial nucleic acid interventions.

I'm hopeful that our ability to discover these complex target biologies will match our newfound skill in drugging them.

An era of target abundance

The data are quite compelling that target discovery is the limiting reagent in modern drug development. New technologies offer an opportunity to invert the curve of Eroom's law and arc toward progress. We have the potential to enter a future where targets are no longer rate limiting.

How should we allocate resources in light of this opportunity?

Science and therapeutic discovery are driven by pools of public (~$50B/year, US NIH + NSF), philanthropic ($1-2B/year), and private capital (~$5-10B/year, venture + IPOs). Of these, public financing is potentially the largest driver based on sheer scale.

Philanthropic academic institutions (Arc, Broad, CZI) have already taken the first steps to pull this possible future forward. Both Arc and CZI have announced major initiatives to build models suitable for large scale target discovery, and the Broad recently launched an AI center that may engender similar progress.

Therapeutic discovery would benefit from public investment following suit. This will require institutions like the NIH to fund larger, team-oriented projects with more integrated support from computer science researchers than the traditional one PI, one R01 scheme that dominates the agency.

Private capital has begun to place bets on this thesis, but a plurality of resources is still concentrated on prosecuting known targets. Even on the frontier of firms leveraging artificial intelligence (techbio firms, if you'll allow it), much capital is focused on designing new molecules to these old targets more expeditiously.

This likely stems from the fact that while therapeutic engineering has a lower expected value than prosecuting new targets, it likewise has lower volatility, and there are larger pools of capital available for low vol, low EV bets than high vol, high EV bets.
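A toy illustration of that trade-off, with entirely hypothetical payoffs expressed as multiples of invested capital: a "known target" program modeled as a coin flip between a modest win and a partial loss, and a "new target" program modeled as a rare but large win. The numbers are invented to show the shape of the argument, not estimates of real program economics.

```python
import math


def mean_and_stdev(outcomes: list[tuple[float, float]]) -> tuple[float, float]:
    """Expected value and standard deviation of a discrete bet given (probability, payoff) pairs."""
    mean = sum(p * x for p, x in outcomes)
    variance = sum(p * (x - mean) ** 2 for p, x in outcomes)
    return mean, math.sqrt(variance)


# Hypothetical payoffs as multiples of invested capital.
known_target = [(0.5, 2.0), (0.5, 0.2)]   # frequent, modest outcomes
new_target = [(0.1, 20.0), (0.9, 0.0)]    # rare, large outcomes

for name, bet in (("known target", known_target), ("new target", new_target)):
    ev, sd = mean_and_stdev(bet)
    print(f"{name}: EV {ev:.2f}x, stdev {sd:.2f}x")
```

Under these made-up numbers the new-target bet has nearly twice the expected value but more than six times the volatility, the profile that larger, risk-averse capital pools tend to avoid.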

Biotechnology companies often take decades to turn a profit.[14] If you believe that the future of human health lies outside the narrow universe of known targets, it's rational to allocate more resources toward that emerging future, even if you believe it will take time to manifest.

Coda

Eroom’s law hangs heavily upon the neck of the biotech industry. Many have internalized it as a form of gravity: immutable & recalcitrant to fundamental understanding. In fact, it is neither. The slowdown in R&D productivity over the past decades is primarily a limitation of our biological understanding, not the loss of a rare and essential element from the surface of the Earth or an impenetrable barrier of regulation.

Our industry has often reacted to this sense of inevitable decay by attempting to hide from risk. Rather than learning to ask better scientific questions, we’ve too often avoided asking any questions where the answers are not already known. This has resulted in hundreds of distinct therapies attempting to drug the same small group of biologies. It seems self-evident that this is not the allocation of resources that maximizes the number of healthy years we deliver to the world.

We are entering an epoch of abundant intelligence. With these tools, we have the opportunity to discover & design target biologies at a cost that’s too cheap to meter. The therapies that emerge could serve as the counterexample that downgrades Eroom’s law to a historic conjecture.

If realized, the reignition of our therapeutic discovery cadence would represent perhaps the most valuable output of the Intelligence Revolution now underway. There is no product more valuable than healthy time.

See Alex Telford's excellent summary of the modern biopharmaceutical development process for an explanation of this phenomenon. Credit to Alex for the image.

Baumol's cost disease is a phenomenon of rising costs across categories of goods & services in rich economies. In brief, as the amount of wealth that can be generated from the most productive activities rises, the opportunity costs of other activities rise as well. This is the basis for everyone's favorite cost over time infographic.

See Arrowsmith et al. 2011, Cook et al. 2014, and Hay et al. 2014 for analyses of drug failure rates. There are differences in these rates across therapeutic areas, target classes (known vs. novel), and drug modalities (small molecule, antibody, gene therapy, etc.), but efficacy failures dominate regardless of how you slice the subpopulations.

See Cook et al. 2014, Dowden et al. 2019, and Wong, Shah, & Lo 2019 for reviews of clinical trial success rates.

See Kirsch-Stefan et al. 2023. The overwhelming majority of biosimilar monoclonal antibodies submitted to the European Medicines Agency (EU equivalent of the US FDA) received marketing approval.

See Cook et al. 2014.

See Infinite Frontiers by Stephen S. Hall (Genentech) and Science Lessons by Gordon Binder (Amgen).

The cost per target is hard to estimate directly. As a simple heuristic, the NIH budget is about $45B/year circa 2022. It's not unreasonable to assume a large fraction of this budget is dedicated to the traditional target identification process. Let's say ~10-20% to be conservative. This suggests we spend on the order of $4.5-9B/year on collective target discovery, and yet we yield only a few impactful targets per decade. This puts us easily into the realm of >$1B/target.
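The same back-of-envelope estimate as a short calculation. The $45B budget and 10-20% share come from the footnote above; the count of "a few" impactful targets per decade is set to a hypothetical 3 for illustration.

```python
NIH_BUDGET_PER_YEAR = 45e9            # USD, circa 2022 (figure from the text)
IMPACTFUL_TARGETS_PER_DECADE = 3      # hypothetical stand-in for "a few"


def cost_per_target(budget: float, share: float, targets_per_decade: float) -> float:
    """A decade of target-discovery spending divided by the targets found in that decade."""
    return budget * share * 10 / targets_per_decade


for share in (0.10, 0.20):  # assumed fraction of the budget spent on target identification
    annual = NIH_BUDGET_PER_YEAR * share
    per_target = cost_per_target(NIH_BUDGET_PER_YEAR, share, IMPACTFUL_TARGETS_PER_DECADE)
    print(f"{share:.0%} share: ${annual / 1e9:.1f}B/year, ~${per_target / 1e9:.0f}B per target")
```

Even if "a few" meant ten impactful targets per decade, the estimate would stay above $4B per target.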

Shameless plugs: See Techbio is a speciation event and Predictive Biology for related discussion of how AI unlocks new biological questions.

In biology, we have two traditional ways of figuring out how things work ("establishing causality" in formal parlance). One is to follow systems over time. We know events in the past cause events in the future, so the arrow of time can turn correlative observations into causal associations. The other, more common mechanism is a perturbation experiment where a component is added to or removed from a system. Based on how the behavior of the system changes, we can determine what the component does. Functional genomics methods are largely focused on parallelizing the latter method by using DNA sequences rather than physical space to separate experiments.

disclosure: I co-founded & run NewLimit

Famously, Regeneron first posted a profit 24 years after founding.
